Introduction

Recent years have seen a surge of interest in algorithmic collusion in the global antitrust community. Since the publication of Ariel Ezrachi and Maurice Stucke’s influential Virtual Competition in 2016,[1] which brought algorithmic collusion to the forefront of the world of antitrust, numerous articles, commentaries, and agency reports have been published on this topic. In late 2018, the US Federal Trade Commission (FTC) devoted an entire hearing to the implications of artificial intelligence (AI) and algorithms at its Hearings on Competition and Consumer Protection in the 21st Century. António Gomes, Head of the Competition Division at the Organization for Economic Co-operation and Development (OECD), succinctly summarized the concerns about algorithmic collusion in a 2017 interview, stating that developing artificial intelligence (AI) and machine learning that enable algorithms more efficiently to achieve a collusive outcome is “the most complex and subtle way for companies to collude, without explicitly programming algorithms to do so.”[2]

The possibility of tacit collusion is not hard to see in some highly stylized cases. For example, suppose you and I are the only two online sellers of a homogeneous product and we know that our procurement costs are similar. Because our prices are posted online, we also know each other’s pricing.

Suppose I adopt the following strategy: First, I raise and then keep my price high until you also change your price. If you do not raise your price in response to my price increase, I then drop my price to the cost of the product, or even below the cost. The low price “hurts” both your revenue and mine. I keep this “low price” regime for a period of time and then repeat the process of raising and then lowering prices if you do not raise your prices as well. After several rounds of interaction, it is possible that you realize that I appear to be sending you a signal: raise price with me or suffer financial losses. At that point, you might decide to reciprocate my price increase, given our shared interest in long-term profitability. Notice that during the entire interaction, there are no traditional communications between us. We do not even need to know each other as long as all the conditions are met and the intended learning is somehow achieved.[3] Note the “reward-punishment” element in my algorithm, a point which I will return to.

Many have argued that the threat of algorithmic collusion is real and poses much greater challenges for antitrust enforcement than human coordination and collusion. Maurice E. Stucke and Ariel Ezrachi postulate that AI “can expand tacit collusion beyond price, beyond oligopolistic markets, and beyond easy detection.”[4] Michal S. Gal stated that “a more complicated scenario involves tacit collusion among algorithms, reached without the need for a preliminary agreement among them.”[5] Dylan I. Ballard and Amar S. Naik echoed, “Joint conduct by robots is likely to be different—harder to detect, more effective, more stable and persistent.” [6] The background note by the OECD Secretariat also states that “once it has been asserted that market conditions are prone to collusion, it is likely that algorithms learning faster than humans are also able through high-speed trial-and-error to eventually reach a cooperative equilibrium.”[7] These concerns naturally make one wonder what we should do about the possibility of algorithms reaching a collusive outcome even without companies intending that result. Under this premise, many authors then went on to examine the legal challenges and potential solutions.[8]

At the same time, some have emphasized that autonomous algorithmic collusion in real markets is at most a theoretical possibility at the moment given the lack of empirical evidence. For example, Nicolas Petit argued that “AAI [Antitrust and Artificial Intelligence] literature is the closest ever our field came to science-fiction.”[9] Salil K. Mehra stated that, regarding algorithms, the “possibility of enhanced tacit collusion . . . remains theoretical.”[10] Gautier et al. went as far as to argue that “the hype surrounding the capability of algorithms and the potential harm that they can cause to societal welfare is currently unjustified.”[11]

Turning to the views of antitrust enforcers, one senior U.S. Department of Justice (DOJ) Antitrust Division official stated in 2018 that “[C]oncerns about price fixing through algorithms stem from a lack of understanding of the technology, and that tacit collusion through such mechanisms is not illegal without an agreement among participants.” [12] The Competition Bureau of Canada, while recognizing the constantly evolving technology and business practices, pointed out the lack of evidence of such autonomous algorithmic collusion.[13] Even if algorithmic collusion is possible, the French and German antitrust authorities concluded in their recent Joint Report that “the actual impact of the use of algorithms on the stability of collusion in markets is a priori uncertain and depends on the respective market characteristics.”[14]

In the context of this ongoing debate, the evaluation of the plausibility of tacit algorithmic collusion becomes an important exercise. Insights about how algorithms may or may not come to collude are invaluable in focusing attention on the key legal and economic questions, policy dilemmas, and practical real-world evidence. As we will see, the state-of-the-art research has a lot of insights to offer and a good understanding of this literature is a crucial first step to better understanding the antitrust risks of algorithmic pricing and devising better antitrust policies to mitigate those risks. This is the focus of the second part of this chapter in which I survey and draw lessons from the literature on AI and the economics of algorithmic collusion.[15] Most notably, there is growing experimental evidence in both the AI and the economics literature showing that algorithms can be developed to cooperate and even elicit cooperation from competitors. At the same time, as most of these studies acknowledge, there are many technical challenges. As I elaborate below, these challenges imply that one should be able to uncover attempts to develop collusive algorithms ex post, even without technical expertise on the part of the investigators. Of course, existence of technical challenges does not mean that we should simply dismiss the risk of algorithmic collusion. I explain why we should remain vigilant in light of recent studies and research agenda proposed in the AI field. In terms of antitrust policy implications, I argue that at a minimum, designing and deploying autonomous collusive algorithms should be prohibited even if humans take their hands off the wheel after deploying such algorithms and it is an algorithm that ultimately colludes with others.

A more challenging situation is one where an algorithm that is not designed to collude, but rather simply through profit-maximizing learns to collude with competitors. I discuss some recent experimental evidence showing this type of learning to collude is indeed possible. The good news is that these early studies also demonstrate that it is possible to check for collusive conduct, suggesting that we may have the tool to uncover such learned collusion and the black-box nature of an algorithm itself does not necessarily leaves us completely in the dark.

Next, I explore the emerging area of algorithmic compliance. Most of the policy debate on algorithmic collusion so far has focused on the question of how algorithms may harm competition. I argue in this chapter that AI also holds a great deal of promise in enhancing antitrust compliance and helping us combat collusion, human or algorithmic. Specifically, I discuss some existing proposals, draw additional lessons from the recent AI literature, and present potential technical frameworks, inspired by the current machine learning literature, for compliant algorithmic design.

This chapter is not the first to survey and draw lessons from the relevant literature. Earlier discussions can be found in Schwalbe (2018), Deng (2018), Van Uytsel (2018), and more recently Gautier et al (2020), among others.[16] In addition to covering more recent academic research in AI and economics, much of which appeared after 2019, this chapter also offers a broader coverage by bringing two closely related topics together: algorithmic collusion and algorithmic compliance.

While I mainly focus on the evidence and the lessons from academic literature and do not discuss legal approaches such as per se illegality and evidentiary standards, interested readers can find much insightful discussion in Harrington (2019), Gal (2019), and Ezrachi and Stucke (2020), among others.[17] Another important topic that falls outside the scope of this chapter is the important interaction between algorithmic price discrimination and algorithmic collusion, especially the observation that algorithmic price discrimination could hinder collusion. On this topic, interested readers are referred to the 2018 CMA report on pricing algorithms.[18] Finally, the same CMA report and the “2019 Joint Report on Algorithms and Competition” by the Bundeskartellamt and Autorité de la concurrence also review the relevant literature and lay out the latest thinking of these antitrust agencies.

I. A Brief Introduction to AI and Machine Learning[19]

The antitrust community is largely playing catch-up on the technical aspects of AI and machine learning (ML). As the former Acting Chair of the Federal Trade Commission Maureen K. Ohlhausen put it, “[t]he inner workings of these tools are poorly understood by virtually everyone outside the narrow circle of technical experts that directly work in the field.”[20]

While antitrust practitioners, scholars, and policy makers do not need to know all the nuts and bolts of these technologies, a basic understanding is necessary to assess the implications of the AI/ML research on antitrust issues, especially algorithmic collusion. Through a series of examples, I introduce fundamental concepts in ML. Along the way, I also discuss a wide variety of ML applications in the law and economics fields to build the readers’ understanding of AI/ML. Since the discussion here is aimed at readers without a technical background, I prioritize intuitions and pedagogy over analytical or theoretical rigor.

A. Machine Learning vs. Artificial Intelligence

The distinction between ML and AI is not always made in the antitrust literature. This is largely harmless because the discussion about the antitrust concerns is rarely about the definitions or other technical subtleties. It is still helpful that we understand that ML and AI are different concepts. At a basic level, the difference can be understood as the difference between learning and intelligence. Obviously, learning is not intelligence but rather a way to achieve intelligence. Computer scientist Tom Mitchell gave a widely quoted and more formal definition of a machine learning algorithm: “a computer program is said to learn from experience E with respect to some class of tasks T and performance measure P if its performance at tasks in T, as measured by P, improves with experience E.”[21] To put it simply, machine learning algorithms are computer programs that learn from and improve with experiences. The definition of artificial intelligence, on the other hand, focuses on the question of what intelligence is. In the book Artificial Intelligence: A Modern Approach, the authors listed eight definitions of AI in four categories along two dimensions: (1) thinking and acting humanly, and (2) thinking and acting rationally.[22] Although there are other approaches to obtaining AI, machine learning has become the dominant one in recent years.[23]

B. How Do Machines Learn?

1. Supervised Learning: Learning Through Examples

Econometrics and statistics are now routinely used in antitrust litigation and merger review. As a result, many antitrust practitioners have a basic understanding of techniques. Linear regression, one of the most common analytical tools used in antitrust, turns out to be a machine learning algorithm. While economists and even statisticians seldom use the term “machine learning,” computer scientists do. This is rapidly changing as ML is quickly gaining popularity outside of computer science.

The concept of a regression is simple and intuitive. We do “mental” regressions all the time. For instance, we all know roughly the average temperature of the summer where we have lived for 10 years. When we compute that average, what we do is, in essence, a regression, albeit a very simple one. The key ingredient for such a calculation is a collection of what we could call examples i.e., data on temperature in the summer. Effectively, these examples guide or supervise how we learn. Perhaps not surprisingly, the related ML techniques (regressions included)—i.e., those that rely on the availability of examples—are called supervised learning methods. And the examples are also known as training data or a training sample in the sense that they allow us to train the learning process. You can also see why it makes sense to call them ML methods. They at least mimic in concept how a human learns about our world.[24]

Example: To antitrust attorneys and economists, the most familiar application of regression is probably a model of prices of the product in question. The model relates the prices to the observed drivers of prices (supply and demand factors). The quantity of interest is not limited to prices, however. In a merger analysis, for example, economists may also build regression models for market shares. In fact, regression analysis is rather common in today’s antitrust cases. Not surprisingly, there are many references on regressions written for the antitrust audience.[25]

Example: As more and more electronic documents are preserved and become available, identifying relevant documents in the legal discovery process has become a costly endeavor. Against this backdrop, a set of supervised learning techniques, generally called predictive coding, has been employed to facilitate this process. A prototypical predictive coding approach works as follows. First, a subset of potentially relevant documents is selected. Then human experts review a random subsample of these selected documents and mark the relevant and responsive documents (together with associated metadata such as the author and date). These marked documents provide the examples necessary for the application of supervised learning methods.[26] Since the goal is to label a document as either relevant or not, the problem that predictive coding tries to solve is also known as classification.

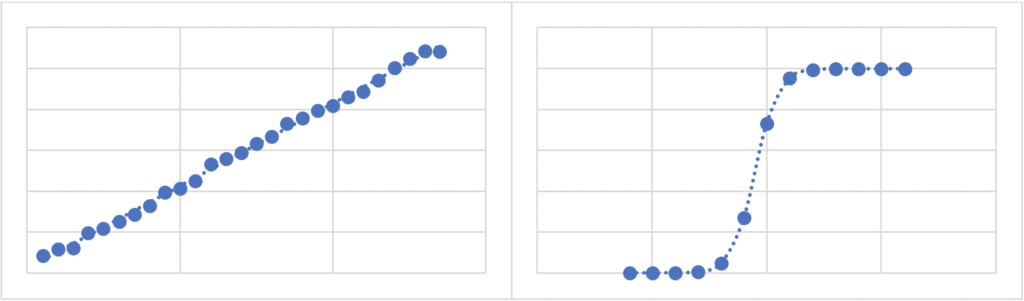

Example: Artificial neural network (ANN) has become a buzzword in the recent AI/ML literature, as well as in the antitrust debate, as has the closely related concept of deep learning or deep neural network. ANN has seen a wide variety of successful applications ranging from image recognition to machine translation. But as with any technical jargon, these terms are extremely vague to anyone outside the technical field. It turns out that the basic ANN is just a regression (with technical bells and whistles) and hence another supervised learning method. Figure 1 shows two possible relationships between two quantities. On the graph on the left, the two quantities appear to have a linear relationship in that they appear to move along a straight line, although not perfectly. The graph on the right shows a nonlinear relationship. This is why a regression model that reflects a linear relationship is called a linear regression and a regression model that reflects a nonlinear relationship is called a nonlinear regression.[27] ANN is a type of nonlinear regression, flexible in that it can capture different and complex shapes of nonlinearity. However, this flexibility comes with a cost. Typically, for ANNs to work well, a large number of examples is required. It has been argued that, if ANNs are used to design business decision algorithms, the complexity of this technology could significantly complicate antitrust enforcement efforts.[28] As I have argued elsewhere, whether complex techniques such as ANN are necessarily superior in designing potentially collusive algorithms is unclear.[29]

Figure 1: Illustration of a linear and nonlinear relationship

Example: Algorithmic price discrimination has also been a focus of recent discussion in the antitrust literature. The idea is that as companies collect more and more personal data on their customers, they may be increasingly capable of price discrimination among them. In economic terms, companies may be able to use personal data to gauge individual willingness to pay. The availability of such data, as well as customers’ past purchasing/spending behavior (again, examples), could be used to train supervised learning methods to better predict consumer behavior and hence enable companies to offer personalized product options and pricing.[30]

2. Unsupervised Learning: Learning Through Differences

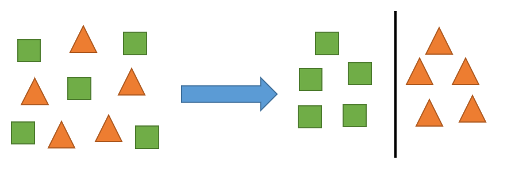

We as humans engage in other cognitive tasks. Consider the following example. Suppose there is a mix of triangles and squares. The task is to put different shapes into separate groups. While this task is incredibly trivial for us, let’s think about exactly how our brains work in such a situation. One plausible hypothesis is that we have “a mental ruler” that measures pairwise differences of—or, using a slightly more technical term, distances between—the shapes. We then put the objects in one group when their differences are “small” and in a different group when the differences are “large.” Figure 2 illustrates this. Note that the task does not require us to know what a triangle or a square look like. In other words, we do not need a set of shapes with labels (triangle vs. square) in order to separate them. The absence of such labelled training data is the hallmark of what is known as unsupervised learning. And the grouping exercise is known as clustering in ML jargon. In these types of learning, the concept of distance is a critical ingredient and underlies even the most sophisticated unsupervised learning techniques.

Figure 2: Classification as unsupervised learning

Example: In document review, another objective is to group documents based on certain criteria even before we know whether a document is relevant. For example, one may want to group documents by author or date, or, in more complex cases, by content through the use of other unsupervised ML algorithms.

Example: Novelty or anomaly detection, which identifies the few instances that are different from the majority, is conceptually similar to clustering. In many industries (credit card, telecommunications, etc.), anomaly detection is hugely important in detecting fraud. One simple but powerful idea behind anomaly detection is to start with characterizing the “norm.” For example, once we use a credit card long enough, the card company is able to build a personal profile for our spending behavior. If we have never made a purchase in a foreign country when a transaction just took place in that location, that transaction may be flagged as an anomaly and the card company could issue an alert. In the antitrust domain, similar techniques can be used to detect and monitor cartel formation. I elaborated on how ML/AI could be leveraged to do so in another article.[31] As an example, Joseph Harrington has argued that a sharp increase in the price-cost margin could signal the onset of a cartel.[32] Such a price-cost margin “screen,” as it is commonly known in the cartel detection literature, fits nicely in the unsupervised learning framework. Thus, despite the concerns mentioned earlier that ML/AI could facilitate collusion, the very same set of tools might be used to deter and prevent cartel formation. This is a point I will elaborate below in the context of algorithmic compliance.

3. Reinforcement Learning: Learning Through “Trial and Error”

Another type of machine learning that is particularly relevant to the discussion of algorithmic collusion is known as reinforcement learning (RL). Consider the case of a child learning about different animals. When a child picks up a toy elephant but calls it a giraffe, we would correct her. When she gets it right, we congratulate and reward her. And we repeat that process until she gets it. This process is probably the most common way of reinforcing proper behavior.

Andrew G. Barto and Thomas G. Dietterich give another example. Imagine that you are talking on the phone where the signal is not very good, but moving around to find the right spot.[33] Every time you move, you ask your partner whether he or she can hear you better. You do this until you either find a good spot or give up. Reinforcement learning mimics this type of “trial and error” process. Notice here that the information we receive does not directly tell us where we should go to obtain good reception. In other words, we do not have a collection of examples of location or reception as in a supervised learning case, at least not in a new environment. We make a move and then assess our current situation. As Barto and Dietterich put it, “[w]e have to move around—explore—in order to decide where we should go.” This is a main difference between RL and supervised learning.

Some of the most prominent success stories of RL come from the field of game play. AlphaGo, an RL algorithm, beat world champions at the ancient game of Go in 2016 and 2017. AlphaGo was, however, recently defeated by the next generation of the algorithm AlphaGoZero, losing all 100 games played.[34] In fact, AlphaGoZero uses RL to start from scratch (hence the zero in the name of the algorithm) and trains itself by playing against itself. In the emerging antitrust and AI literature, Ittoo and Petit (2017) argue that “RL is a suitable framework to study the interaction of profit maximizing algorithmic agents because it shares several similarities with the situation of oligopolists in markets.”[35] Many economic studies on algorithmic collusion, that I will discuss below, use RL.

Particularly relevant to algorithmic collusion is the multi-agent learning problem. This is where multiple parties are involved in the learning process and their behavior directly affects each other. For example, in a zero-sum game, if one player wins, another player must lose. In a coordination game such as basketball, the incentives of the players on the same team are generally aligned. In contrast, in a positive-sum game such as prisoner’s dilemma, a stylized model I will discuss in detail below, even though the parties understand that they could achieve a higher overall and individual payoff if they coordinate their behavior, there is a temptation to defect. The last situation resembles the problem cartel members may face and is the most familiar to antitrust attorneys and economists. Research on multi-agent learning in the prisoner’s dilemma type of situation is particularly pertinent to our understanding of algorithmic collusion. We will return to this topic in the next section.

4. Explainable AI

In the recent years, there has been a rapidly growing interest in explainable AI in both academia and the private sector. As the name suggests, explainable AI aims to make algorithmic decision-making understandable to humans.[36] Notably, the Defense Advanced Research Projects Agency (DARPA) sponsors a program called XAI (Explainable Artificial Intelligence).[37] The organization FATML (Fairness, Accountability, and Transparency in Machine Learning) also aims to promote the explainable AI effort. Recent privacy regulations such as GDPR have also put a spotlight on explainability. While we still have a long way to go in the explainable AI research, the industry and academic interest is a promising starting point.[38]

Some of the commercial interest in explainable AI comes from the commercial lending industry because of the regulation and the need to explain lending decisions to consumers, especially when the decision is made by machine learning models. It should be no surprise that the same need for explainability goes well beyond the lending industry. For example, being able to explain algorithmic decisions or recommendations is equally important in the medical and health care domains. Leveraging explainable AI can and should also be an important part of the research program for antitrust compliance by design, a concept I will elaborate below.

The AI research community has proposed several ideas to help achieve interpretability and explainability of AI. Two common approaches, rooted in the technical aspects of AI, are (1) the use of inherently interpretable algorithms (known as “white-box algorithms”) and (2) the use of clever backward engineering (also known as post hoc methods).[39] Naturally, there is not a single definition of explainability, and different domains may find different definitions acceptable. I will argue below that, in the context of algorithmic compliance, an algorithm’s ability to explain and answer why, why not, and what-if questions is particularly helpful.

II. What Do We Know About Algorithmic Collusion?[40]

A. Cartels’ Incentive Problem

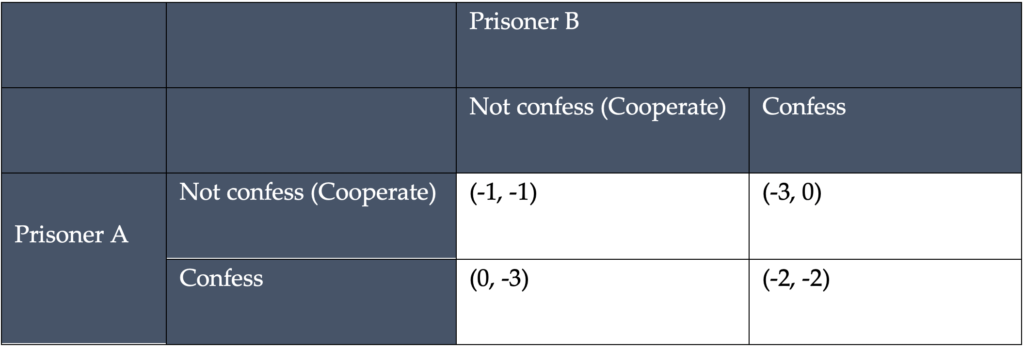

To better understand the problems a cartel must solve to sustain an agreement to restrict competition (e.g., raise prices or reduce output), it is instructive to look at the well-known prisoner’s dilemma (PD). Imagine two accomplices of a crime are being interrogated in separate rooms and they cannot communicate. They must decide whether to confess to the crime and hence expose the accomplice. Table 1 shows the consequences of their decisions.

Table 1: A Prisoner’s Dilemma: Understanding the incentive problem of a cartel

The two rows and two columns in Table 1 represent the two prisoners and their two possible choices. For example, the cell (-1, -1) tells us that if neither confesses, each would get one year in prison. Similarly, if Prisoner A does not confess but Prisoner B does, then Prisoner A gets three years in jail and Prisoner B goes free; this corresponds to the upper right cell (-3, 0). Since the situation is symmetric, the lower left cell is (0, -3) and the penalty is reversed. Finally, if both confess, then each would get two years (as shown in the lower right cell).

Given these numbers, it is clear from a joint-interest perspective that the best outcome is (-1, -1), a total of two years. And the prisoners can achieve that by “cooperating” (i.e., not confessing). Unfortunately for the prisoners, since confessing is the rational move regardless of what the other does, both will end up confessing, leading to two years for each, an outcome strictly worse than the “cooperative” outcome. It is not surprising that cartel members face a similar type of incentive problem. They are both better off if they cooperate (e.g., raise prices or reduce output). But at the same time, if I know that my competitors are raising prices, I have an incentive to lower mine to steal the business and increase my revenue. Since a formal cartel contract is not enforceable in most if not all jurisdictions, they have to find other and often imperfect ways to implement their agreement.

A critical point is that solving this incentive problem is key to the success of a cartel: the use of an algorithm does not magically remove this fundamental incentive problem that a cartel faces. And unlike the “one-shot” situation in the standard prisoner’s dilemma, competitors interact with each other repeatedly in the market. It turns out that in repeated interactions, there is “more hope” that firms can learn to cooperate. In fact, repeated interaction is an important reason that tacit collusion emerges in the stylized example discussed earlier in the chapter.

B. The AI Literature

Is there any evidence that computer algorithms can (tacitly) collude? We have not seen tacitly colluding robots in real markets. The infamous Topkins and Trod cases involve the use of pricing algorithms that implement human agreements.[41] However, they are not the focus of this chapter.

But there is growing theoretical and experimental evidence showing that certain algorithms could lead to tacit coordination. In the AI field of multi-agent learning, there is an active literature on designing algorithms that can cooperate and even elicit cooperation in social dilemmas such as the PD. The AI researchers’ goal is, of course, not to design evil collusive robots. Rather, they are interested in designing AIs that have the ability to cooperate with each other and with humans for social good.[42]

One algorithm that has been found to be conducive to cooperative behavior in experimental settings is the so-called tit-for-tat (TFT) algorithm.[43] This strategy starts with cooperation, but then each party will simply copy exactly what the opponent did in the previous period in repeated interaction. Intuitively, if two opponents start by cooperating, then the very definition of the TFT algorithm dictates their continued cooperation. But will competitors have an incentive to deviate from cooperation? The answer is that they might not, if they realize that despite the higher profit they could obtain by cheating in the current period, they will have to compete with others and hence generate lower profit in the future. While not guaranteed, if the firms care enough about future profitability, they might not find it worthwhile to deviate.

The TFT algorithm, despite its simplicity, intuitive appeal, and some experimental success, has a number of limitations. For example, to implement TFT, one needs to know what the competitors have done (because TFT copies the competitors’ behavior) and the consequences of future interactions (because they need to assess if it pays to cooperate). In the real world, firms typically do not possess that information, except in certain special cases.[44]

In recent years, there has been more research that aims to relax various assumptions and construct more robust cooperative algorithms. In a study published in 2018, a team of researchers designed an expert system (a type of AI technology) that can cooperate with opposing players in a variety of situations. Intuitively speaking, an expert system requires two components: a pool of “experts” or strategies and a mechanism to choose a particular subset of strategies given the information available to the AI system. Among the pool of experts in their algorithm are TFT-style “trigger” strategies. The researchers found that although the previous version of their expert system (codenamed S++) was better than many other algorithms at cooperating, the performance of a modified algorithm (codenamed S#) is significantly better, especially when playing against humans, because it is equipped with the capability to communicate (through costless “cheap” talk based on a set of pre-programmed messages).[45] But the engineering process is by no means easy or obvious. In addition to the capability to communicate, the researchers also attribute the success of their algorithm to a carefully selected pool of experts and an optimization procedure that is “non-conventional.”[46] We will discuss the implications of this study, especially the algorithm’s ability to communicate and the associated technical challenges, on antitrust and compliance below.

Even more recently, two researchers developed algorithms that can cooperate with opponents in similar social dilemmas.[47] One of their algorithms was, in fact, inspired by the TFT algorithm. Specifically, the researchers tried to relax the strong information requirements of the naïve TFT algorithm. Another recent study adopted an interesting approach to design an algorithm that promotes cooperation. Its idea is to introduce an additional planning agent that can distribute rewards or punishments to the algorithmic players as a way to guide them to cooperation, analogous to an algorithmic hub and spoke scheme.[48] Another group of researchers recently proposed an algorithm that explicitly takes into account the opponent’s learning through interactions and found that their algorithm worked well in eliciting cooperative behavior.[49] Yet other researchers tried to make algorithms learn to cooperate with others by modifying the algorithm’s objective. For a pricing algorithm, the most natural objective would be to maximize profits. But one study shows that by adopting an objective that “encourages an [AI] agent to imagine the consequences of sticking to the status-quo,” their algorithm is able to learn to cooperate “without sharing rewards, gradients, or using a communication channel.”[50] The researchers credit this capability to the fact that the “imagined stickiness ensures that an agent gets a better estimate of the cooperative or selfish policy.”[51] Finally, a carefully designed reinforcement learning algorithm, called “Foolproof Cooperative Learning” (FCL), was recently developed and shown to learn to play TFT, without being explicitly programmed to do so.[52] In the researchers’ words, FCL, “by construction, converges to a Tit-for-Tat behavior, cooperative against itself and retaliatory against selfish algorithms” and “FCL is an example of learning equilibrium that forces a cooperative behavior.”[53] New AI research on the topic of machine-machine and machine-human cooperation continues to appear.

With growing experimental evidence that algorithms can be designed to tacitly cooperate, the next question naturally becomes whether a collusive pricing algorithm inspired by this research is available for use in the real world. The answer is that despite the promising theoretical and experimental results discussed above, we have a long way to go.

Several limitations are worth keeping in mind. First, almost all of these studies focus on two players (a duopoly). It is well recognized that everything else being equal, as the number of players increases, collusion, tacit or explicit, becomes more difficult. Second, the type of games (e.g., repeated prisoner’s dilemma and its variants) is simplistic and the universe of possible strategies in these experimental studies are rather limited, especially when compared to the real business world.[54] Third, most of these experimental studies assume a stable market environment. For example, in most AI studies, the payoffs to the AI agents, as well as the environment in which AI agents operate, are typically fixed.[55] This is a significant limitation because demand variability and uncertainty is not just a norm in the real world, but also has been long recognized by economists to have important implications on how cartels operate. For example, with imperfect monitoring, if the market price is falling, cartel firms may have a hard time figuring out whether the falling price is due to cheating or to declining demand (“a negative demand shock”). In fact, the economic literature shows that a rational cartel would need to internalize the disruptive nature of demand uncertainty when the cartel monitoring is imperfect.[56] Interestingly, as we will discuss in the next section, recent economic studies have shown that reduced demand uncertainty, achieved by the use of algorithms, for example, may actually make a cartel more difficult to sustain.

Another important observation from the AI research is that the algorithms being designed are not necessarily what economists call “equilibrium” strategies. Equilibrium strategies are “stable” in the sense that, if you know that you and your competitors adopt this strategy, none of you would have the incentive to switch to another strategy.[57] That is not the case for some of the algorithms recently developed by AI researchers.[58] In a recent study mentioned earlier, despite the promising experimental findings, the researchers acknowledge that unless an algorithm is an equilibrium learning strategy, it can be exploited by others, meaning that players may have an incentive to move away from their proposed algorithm.[59] This observation has a powerful implication: unless firms are fully committed to a “collusive” algorithm that is not an equilibrium strategy, there will be a temptation for the (rational) firms to change their strategy and hence potentially disrupt the status quo or a potentially tacitly collusive outcome.

Also relevant is whether the AI agents are symmetric; in other words, whether the opposing players have identical payoffs if they adopt the same strategies. In fact, almost all the AI studies that use the repeated prisoner’s dilemma or its variants focus on the symmetric case. As I will discuss in the next section, the existence of various types of asymmetry (cost, market share, etc.) tends to make reaching a cartel agreement harder and the use of algorithms is unlikely to change that. Similar to the case of time-varying demand, economists have shown that a rational cartel may also need to explicitly take asymmetry into account and adapt its pricing arrangement accordingly.[60] So there are good reasons to suspect that the AI algorithms designed under symmetry do not necessarily fare well in more realistic, asymmetric situations.

Given all these real-world complications, it is not surprising that empirically, as of now, there is no known case of tacitly colluding robots in the real world. But at the same time, the AI literature offers several insights that inform us how best to approach the antitrust risk of algorithmic collusion. The most significant, and perhaps also the most obvious is that designing algorithms with proven capability to tacitly collude in realistic situations is a challenging technical problem. The large-scale study (Crandall et al, 2018) started with 25 algorithms and found that, in a variety of contexts, not all of them learned to cooperate effectively, either with themselves or with other algorithmic players. In fact, the researchers identified the more successful algorithms only after extensive experiments and careful “non-conventional” design choices. They highlighted a number of technical challenges. For example, they pointed out that a good algorithm must be flexible in that it needs to learn to cooperate with others without necessarily having prior knowledge of their behaviors. But to do that, the algorithm must be able to deter potentially exploitative behavior from others and, “when beneficial, determine how to elicit cooperation from a (potentially distrustful) opponent who might be disinclined to cooperate.”[61] The researchers went on to state that these challenges often cause AI algorithms to defect rather than to cooperate “even when doing so would be beneficial to the algorithm’s long-term payoffs.”[62] Another paper further noted “[l]earning successfully in such circumstances is challenging since the changing strategies of learning associates creates a non-stationary environment that is problematic for traditional artificial learning algorithms.”[63] One of the researchers of this study reiterated in a 2020 article that “despite substantial progress to date, existing agent-modeling methods too often (a) have unrealistic computational requirements and data needs; (b) fail to properly generalize across environments, tasks, and associates; and (c) guide behavior toward inefficient (myopic) solutions.”[64] Indeed, each one of the AI studies reviewed above had to use clever engineering to confront and solve some of these challenges.

In the next section, I will discuss and comment on some important, recent experimental studies in which standard reinforcement learning pricing algorithms are shown to be able to learn to collude with one another without any special design tweaks or instructions from the human developers. Given the limitations to be discussed below and the early stage of the recent research, however, it is safe to conclude that to design an algorithm that has some degree of guaranteed success in eliciting tacit collusion in realistic situations and timeframes, the capability to collude most likely needs to be an explicit design feature.[65]

This means that there may very well be important leads that the antitrust agencies and even private litigants could look for in an investigation or a discovery process.[66] Several types of documents are of particular interest. These include any internal document that sheds light on the design goals of the algorithm, any documented behavior of the algorithm, and any document that suggests that the developers had revised and modified their algorithm to further the goal of tacit coordination. Another type of document that should raise red flags is any marketing and promotional material that suggests that the developers may have promoted the algorithm’s capability to elicit coordination from competitors. Note that it is not necessary for the investigators to have an intimate understanding of the technical aspects of the AI algorithm to look for such evidence.[67]

C. The Economics Literature

The economics literature that explicitly examines algorithmic collusion is more limited than the AI literature surveyed above but growing rapidly.[68] In an experimental study, a team of researchers recently showed that their algorithm successfully induced human rivals to fully collude in a simulated duopoly market with a homogenous product and that such a collusive outcome was reached relatively quickly.[69] Despite the various limitations, many of which are discussed in the previous section, it is one of the strongest pieces of evidence that supports the algorithmic collusion hypothesis in economics. But an important observation relevant to our discussion is that their algorithmic design was based on the so-called zero-determinant strategy (ZDS) that is known to be able to extort and elicit cooperation from opponents in iterated prisoners’ dilemmas.[70] I argue below that this type of algorithmic design approach should raise red flags regardless of the fact that it is the algorithms, not the humans, that ultimately collude with others.

Turning to algorithms that are not explicitly designed to collude or elicit collusion, one early study showed that a particular type of RL algorithm called Q-learning could lead to some degree of imperfect tacit collusion in a quantity-setting environment.[71] More recently, another study reported a similar finding in a price-setting environment.[72] Both are important contributions and demonstrate the theoretical possibility of algorithmic collusion, when collusion is not an explicit design goal.[73] The strongest evidence comes from another even more recent study, in which the researchers found that their RL algorithms “consistently learn to charge supra-competitive prices, without communicating with one another . . . This finding is robust to asymmetries in cost or demand, changes in the number of players, and various forms of uncertainty.” [74]

One of the most interesting observations in this study is that the RL algorithm appears to have learned to punish the “cheater” and reward the “collaborator.”[75] This type of reward-punishment strategy has been labeled as problematic collusive behavior and is the defining characteristic of collusion by Harrington (2019).[76] And it is this observation that led the researchers to conclude that the RL algorithm has learned to tacitly collude.

The finding of the RL algorithm’s robust tendency to tacitly collude is concerning. A common caveat for such an experimental study, however, is that the artificial market is too simplistic relative to any real market. Other challenges and limitations have already been discussed in the previous section.[77] I highlight one difficulty here.[78] The RL algorithm in the researchers’ experiments takes an average of 850,000 periods of training to learn to “tacitly collude.”[79] Although that amounts to less than one minute of CPU time, it means that, in the real world, the algorithm “learns” after over 2,300 years if they change prices daily, or over 1.5 years if they change every minute. And this is after the researchers limited the set of possible prices the algorithm could choose from.[80] It is unlikely that any company would be amenable to allow the algorithm to trial-and-error with actual prices, let alone for years. Future technological advances, especially in markets where firms’ algorithms interact at (ultra-)high frequency, may significantly reduce the learning time, but the point is that a “collusive” algorithm is arguably less relevant to the antitrust community if it takes an unrealistically long time to learn to collude. Indeed, the longer the learning takes, the more likely the market structure will change during the learning stage. For example, the number of competitors may change due to entries and exits; new technologies may emerge and disrupt an industry; even the macroeconomic environment may change, all of which create a “non-stationary” environment, making it difficult for an algorithm to learn. Recognizing this limitation, the researchers focused entirely on the algorithmic behavior after the training process had completed.[81] In the researchers’ words, this means that the learning happens “off the job.”

If the training is done offline in a lab environment, the developers could in principle assess algorithmic behavior in a controlled environment as well. In fact, the researchers’ demonstration that detecting collusive behavior is possible shows that the black-box nature of algorithms does not necessarily inhibit our understanding of algorithmic behavior. We do need to keep in mind, however, that algorithmic behavior manifested in controlled experiments will be driven by the assumptions imposed in that environment and as a result, may or may not materialize in real markets.

Another paper (Klein, 2019) follows a similar line of research and shows that Q-learning can also lead to tacit collusion in a simulated environment of sequential competition.[82] Among other assumptions, the study finds that the algorithmic behavior depends critically on the number of prices the AI algorithm is allowed to choose from. Specifically, the algorithms do not consistently learn to price optimally (i.e., play “best response”) with respect to each other. They do so in 312 out of 1,000 simulation experiments (31.2%) when they have seven prices to choose from and only 10 out of 1,000 experiments (1%) when they have 25 prices to choose from.[83] Furthermore, while the algorithm also exhibits the reward-punishment behavior similar to that documented by the previous study when it learns to price at the fully collusive (i.e., monopoly) level, the researcher was able to document this behavior in only 13.5% of their experiments when there are seven prices to choose from and only 1.9% (with at most one price increment away from the monopoly level) when there are 13 possible prices.[84] To put the finding of algorithmic collusion in perspective, the author noted that “for the environment considered in this paper, humans are expected to show a superior collusive performance because tacit collusion is relatively straightforward.”[85] As the researcher recognizes, “while this [the finding of the paper] shows that autonomous algorithmic collusion is in principle possible, practical limitations remain (in particular long learning and required stationarity).”[86]

Another interesting recent article (Salcedo, 2016) provides a set of sufficient conditions under which the use of pricing algorithms leads to tacit collusion.[87] The author considered an algorithmic version of an “invitation to collude.” Three conditions must be true for algorithmic collusion to materialize in his framework. First, competitors should be able to decode each other’s pricing algorithms. Second, after decoding others’ algorithms, the competitors should be able to revise their own pricing algorithms in response. Third, firms should not be able to revise or change their algorithms too fast.[88] Intuitively, under these conditions, a firm could essentially communicate its intent to collude by adopting a “collusive” algorithm and letting the competitor decode it. Once this invitation to collude is decoded, the competitor can then choose to follow the lead or not. When making the decision, the firm on the receiving end will naturally be concerned about the possibility that the invitation is no more than a trick and that once that firm starts to cooperate, the competitor would take advantage of it by immediately reversing course (say, by immediately lowering prices to steal customers away). This is where the third condition comes into the picture. If the firms understand that changing the strategy takes time, then the receiving firm’s concern would be alleviated. Schwalbe (2018) has argued that the situation postulated by Salcedo is an example of direct communication (through the decoding of an algorithm), rather than tacit and is thus equivalent to explicit collusion.[89]

1. Algorithms and Structural Characteristics

One strand of economic literature that has received much attention in the antitrust community identifies the structural characteristics that tend to facilitate/disrupt collusion. We’ve already discussed some of these when we assessed the limitations of the AI studies. In this section, we provide a more systematic discussion on how algorithms could impact these market characteristics.

A partial list of such structural characteristics that some have argued tend to facilitate collusion includes the following:

- Higher market transparency

- More stable demand

- Small and frequent purchases by customers

- Symmetric competitors

- Fewer competitors

- More homogeneous products

- Higher barriers to entry

Market transparency is one obvious characteristic that an algorithm could potentially enhance. Some have argued that algorithm-enhanced market transparency will in turn facilitate collusion. For example, the Autorité de la Concurrence and Bundeskartellamt stated in their “2016 Joint Report on Competition Law and Data” that “. . . market transparency . . . gains new relevance due to technical developments such as sophisticated computer algorithms. For example, by processing all available information and thus monitoring and analysing or anticipating their competitors’ responses to current and future prices, competitors may easier be able to find a sustainable supra-competitive price equilibrium which they can agree on.”[90] In their recent “2019 Joint Report on Algorithms and Competition”, the agencies again noted that “[m]arket transparency for companies facilitates the detection of deviations and thus can increase the stability of collusion. By allowing a greater gathering and processing of information, monitoring algorithms collecting these data could thus foster collusion.”[91] Francisco Beneke and Mark-Oliver Mackenrodt also stated that “coordinated supra-competitive pricing is in many settings difficult due to uncertainties regarding costs of competitors and other variables. If the algorithms can learn how to make accurate predictions on these points, then the need to solve these problems with face-to-face meetings may disappear. . . . One common source of equilibrium instability in oligopoly settings is said to be changes in demand.”[92]

Recent empirical evidence shows that increased transparency may have indeed led to potential tacit collusion in real markets.[93] While these arguments highlight the ways in which transparency could facilitate collusion, it is critical that we recognize that under some conditions, transparency can also undermine it. In fact, firms in several cartels took pains to limit the information they shared and maintained a certain degree of privacy. For example, in the isostatic graphite cartel, firms would enter their own sales on a calculator that was then passed around the table so that only the aggregate sales were observed. Thus, they could compute their own market shares but not their competitors’. In the plasterboard cartel, firms set up a system for exchanging information through an independent third party that would consolidate and then circulate the aggregate information among the firms.[94]

Sugaya and Wolitzky (2018) provided an economic theory to explain why privacy (i.e., less transparency) can be beneficial to the sustainability of a cartel.[95] Specifically, they considered cartels that engage in market/customer allocation; that is, cartel firms agree to serve only their own “home” market and not to sell to the competitors’ home markets. The basic intuition is as follows: When the demand in your home market is strong (and hence you have an incentive to raise your prices), transparency about the (higher) demand in your home market, your costs, and your prices would give the other cartel firms more incentive to enter your markets simply because there would be more to gain. This incentive is stronger, the less patient the competitors are. Along similar lines, Miklos-Thal and Tucker (2019) build a theoretical model to show that while “better forecasting allows colluding firms to better tailor prices to demand conditions, it also increases each firm’s temptation to deviate to a lower price in time periods of high predicted demand.”[96] This result leads them to conclude that “despite concerns expressed by policy makers, better forecasting and algorithms can lead to lower prices and higher consumer surplus.”[97] Under a different economic model, O’Connor and Wilson (2019) reached the same conclusion that greater transparency and clarity about the demand has ambiguous effects on consumer welfare and firm profits. These authors therefore call for a cautious antitrust policy toward the use of AI algorithms.[98]

Sugaya and Wolitzky (2018) gave an analogy that should make it intuitively clear why more information does not necessarily facilitate collusion. Imagine there are two sellers at a park.[99] They can bring either ice cream or umbrellas to sell. Ice cream is in demand on sunny days and umbrellas are in demand on rainy days, and if both sellers bring the same good, they sell at a reduced price. In the absence of weather forecasts, it is an equilibrium for one seller to bring ice cream, the other to bring umbrellas as each expects to receive half the monopoly profits. But if the two sellers know the weather with a high degree of certainty before they pack their carts, they would both have incentive to bring the in-demand good and end up competing and splitting the reduced profits. Thus, in this simple example, transparency about the weather (though not transparency about the firms’ actions) actually hinders collusion.

Some have also argued that an algorithm’s speed could prevent cartels from cheating because any deviation from a tacitly or explicitly agreed-upon price could be detected and potentially retaliated against immediately.[100] For example, OECD’s “Report on Algorithms and Collusion” states that “the advent of the digital economy has revolutionized the speed at which firms can make business decisions. . . . If automation through pricing algorithms is added to digitalization, prices may be updated in real-time, allowing for an immediate retaliation to deviations from collusion.”[101] Beneke and Mackenrodt echoed: “… price lags will tend to disappear since pricing software can react instantly to changes from competitors. Therefore, short-term gains from price cuts will decrease in markets. . . .”[102] The observation that faster reaction reduces the incentive to deviate in the first place under perfect monitoring has been recognized in the economic literature as well. But economists have also shown that faster responses can be a double-edged sword when it comes to cartel stability under imperfect monitoring. A seminal article in this literature (Sannikov & Skrzypacz, 2007) shows that under some conditions, if market information arrives continuously and firms can react to it quickly (for instance, with flexible production technologies), collusion becomes very difficult.[103] Why is that?

Recall that earlier I discussed a situation where consumer demand is volatile and a cartel, producing a homogeneous product, can only observe the market price but not the production of individual cartel members. In that situation, when the firms observe a lower market price, they cannot perfectly tell whether it is due to someone deviating from their agreement or simply due to weak aggregate demand. Firms can deter cheating by resorting to price wars (by producing more, for example) when the price falls below a certain level. In this framework, when the time firms take to adjust their production becomes shorter, there are two counteracting effects on the sustainability of a cartel. On the one hand, the ability to change their production quickly means that they could start a price war as quickly as they want to. This tends to reduce the incentive to cheat and hence makes a cartel more sustainable. On the other hand, when the demand is noisy and hence the market price moves due to short-term idiosyncratic factors, firms that are constantly watching the market price trying to detect potential cheating will likely receive many idiosyncratic signals of lower prices. Under additional assumptions, the study shows that firms will simply commit too many Type I errors (false positives): that is, start price wars too often to sustain collusion in this environment. An experimental study yields results largely consistent with these theoretical predictions.[104] In fact, some studies have shown that one way a cartel could combat the issue is to deliberately delay the information flow.[105] At a theoretical level, it is not hard to imagine that using algorithms to continuously monitor the market information and enable firms to react quickly could bring the reality closer to the one considered in these research studies.[106]

The effect of algorithms on many other factors is ambiguous.[107] Take asymmetry as an example. In general, economists believe that various forms of asymmetry among competitors tend to make (efficient) collusion more difficult.[108] A leading example is one where competitors have different cost structures (i.e., cost asymmetry). In this case, firms may find it difficult to agree to a common price because a lower-cost firm has an incentive to set a lower price than a higher-cost firm. This tends to make the coordination problem harder. In addition, as a research paper put it, “[E]ven if firms agree on a given collusive price, low-cost firms will be more difficult to discipline, both because they might gain more from undercutting their rivals and because they have less to fear from a possible retaliation from high-cost firms.”[109]

Even if firms use the same algorithm provider, they are likely to customize their versions of the algorithm. As a simple example, imagine that some developers tell us that their algorithm is going to increase our profit. But what profit? Certainly, an algorithm that aims to maximize short-term profit is not going to behave the same way as an algorithm that aims to maximize long-term profit.[110] Similarly, we also expect an algorithm to incorporate firm-specific cost information or objectives in its decision-making process. For example, Feedvisor, a provider of pricing algorithms for third-party Amazon marketplace sellers, states that its “pricing strategies for each individual SKU can be set based on a seller’s business objectives, such as revenue optimization, profit, or liquidation.” [111] That is, even if the algorithms adopted by competitors have the same structure and capability, they do not necessarily or automatically eliminate asymmetry. In fact, the algorithms are typically expected to reflect, if not exacerbate, existing asymmetries. Finally, pricing algorithms, by themselves, are unlikely to affect many other structural characteristics, especially those related to demand and firms’ product offerings.

It is worth emphasizing that these structural factors only predict which markets are more susceptible to coordination, not whether market participants are explicitly or tacitly colluding.

D. Implications for Antitrust Compliance and Policy

Despite the significant technical challenges in designing tacitly collusive algorithms and the ambiguous economic relationship between algorithms and collusion, the AI and economic research I surveyed above clearly shows that algorithmic collusion is possible. If there is one lesson we have learned from past experiences, it is that predicting the future of technology is notoriously difficult. In this section, I discuss the reasons we should stay vigilant and what we can do to combat the risk of algorithmic collusion.

As a starting point, a potentially effective antitrust policy is to explicitly prohibit the development and deployment of autonomously collusive algorithms. Consider the algorithmic design that equips an algorithm with the ability to communicate. In a study discussed earlier, the algorithm’s capability to learn to cooperate and maintain cooperation (Crandall et al, 2018) is significantly improved when it can communicate with others (including human counterparts) through costless, non-binding messages (“cheap talk”). That research demonstrates that, just like humans, the ability to communicate can be a key to forging a cooperative relationship among competing algorithms.[112] Recognizing the importance of such non-binding signals, the lead author of that study argued in a recent article that “research into developing algorithms that better utilize non-binding communication signals should be more abundant” because “non-binding communication signals are not being given sufficient attention in many scenarios and algorithms considered by AI researchers. . . .”[113] The author also believes that “the potential value of better using non-binding communication signals often outweighs” the challenges in doing so.[114] He went on to propose a two-step strategy, the first step of which is for AI algorithms to “learn (or being given) a shared-communication protocol.”[115] As the author noted, several strands of AI research, including on automated negotiation[116] and vocabulary alignment[117] can further improve and even automate algorithmic communication. Future research along these lines could accelerate the development of algorithms capable of reaching and sustaining collusion. Designs that enable pricing algorithms to communicate and even negotiate with competitors (humans or algorithms) with the goal of achieving collusion put consumer welfare at risk even if such capability is encoded in machine-readable syntax and humans do not participate in the actual communication or negotiation.[118]

I also discussed AI studies in which researchers were able to design cooperative algorithms by carefully modifying their objective.[119] Any such design modifications with the goal of ensuring or eliciting cooperative behavior from competitors are another example of problematic conduct, regardless of whether supra-competitive prices are ultimately set “autonomously” by the algorithms. More generally, absent procompetitive justifications, basing the design of pricing algorithms on those already known to elicit and maintain cooperation is highly suspicious. The success of such an approach in experimental studies is another reason why we should remain vigilant.[120]

The current research has more to offer. For example, antitrust scholars have advocated more research to understand “collusive” features of an algorithm. Ezrachi and Stucke called this type of research a “collusion incubator.”[121] Harrington went a step further and proposed a detailed research program and discussed its promises and challenges.[122] Specifically, he proposed to create simulated market settings to test and identify algorithmic properties that support supra-competitive prices. AI researchers, in their pursuit of robots capable of cooperating with others, have laid some important groundwork for this effort. On this point, let’s take a closer look at an instructive algorithmic taxonomy discussed in a recent AI study.[123]

Based on this particular taxonomy, algorithms such as TFT are examples of the type of algorithms known as Leaders. Leader algorithms “begin with the end in mind” and “focus on finding a ‘win-win’ solution” by pursuing an answer to the question of what desirable outcome is likely to be acceptable to the counterpart. And once the outcome (e.g., joint-monopoly price) is selected by Leaders, they would then stick to it, as long as their counterparts cooperate and “otherwise punish deviations from the outcome to try to promote its value.”[124] This is precisely the type of problematic reward and punishment scheme discussed by Harrington (2019). If for one reason or another, the counterpart does not accept the outcome, the more flexible Builders algorithms would move on to iteratively seek for consensus and compromises. An example of this type of Builder algorithm is the expert system proposed by Crandall et al (2019) that I discussed earlier. From an antitrust perspective, this is a helpful taxonomy in that it suggests that Leader and Builder algorithms are probably what we should be most concerned about.

1. A Comment on Digital Markets[125]

Before we move on to the lessons for antitrust compliance more specifically, I want to comment on the implications of pricing algorithms on digital markets. Not surprisingly, as more and more businesses are moving online and have at least some online presence, “digital” market is becoming increasingly encompassing. However, many would probably agree that a quintessential digital market should have the following characteristics:

- Prices are posted online, transparent, and potentially scrapable; and

- Competitors sell largely homogenous products either on the same online store (e.g., Amazon) or on their own online websites (e.g., Staples.com and OfficeDepot.com).

In fact, the simulated markets in which Calvano et al (2020) and Klein (2019) demonstrated the possibility of tacit collusion by self-learning algorithms share these characteristics. So, there are good reasons why this type of markets is of great interest and potentially more susceptible to algorithmic collusion. More generally, to the extent that a market already exhibits structural characteristics that are conducive to coordination, the CMA report argues that “algorithmic pricing may be more likely to facilitate collusion.”[126]

As Schwalbe (2018) pointed out, most of the earlier literature on the risk of algorithmic collusion in this type of digital market, however, is illustrated not by self-learning AI algorithms but by simple deterministic pricing rules, most commonly, price-matching ones. Intuitively, if algorithms enable a seller to continuously monitor competitor prices and automatically match them, there would be less competitive pressure and thus less incentive to lower prices to begin with, especially when the algorithms can detect price changes and react instantaneously. This impact on firms’ incentives is no different from other types of price guarantees, however, and “does not pose any novel problems for competition that would not occur, for example, with the widespread use of price guarantees.”[127]

We have already discussed the relationship between market transparency and tacit collusion and, in particular, when transparency could facilitate or hinder (algorithmic) collusion. Even in situations where transparency unequivocally facilitates collusion, it is not implausible that oligopolistic firms sophisticated enough to use complex pricing algorithms, upon seeing a profitable deviation, would use technologies or other workarounds to obscure transparency and secure higher profits. The simple practice of allowing a customer to see the price only after the item is added to their shopping cart would not deter automated price scraping but could make competitor price tracking more difficult.[128] They can and indeed do deny web scraping altogether, especially if it is determined that the scraping is being done by an algorithm. If firms do not want to wholesale-block information scraping by competitors (for example, when they are engaging in some kind of tacit or explicit collusion), they could in theory selectively change the information displayed depending on who is visiting, potentially defeating automated price monitoring.[129] That is, if algorithms can facilitate tacit collusion, there do not appear to be a strong a priori reason that technologies would not be able to facilitate deviation as well. Which of these incentives dominates is an empirical question.

III. Exploring Algorithmic Antitrust Compliance

Having explored the evidence of algorithmic collusion, we turn to another pertinent question: If pricing algorithms could autonomously collude, can they be made antitrust-compliant as well? Many have started pondering this after a series of public comments by EU competition officials in recent years. Explaining this concept, the EU Competition Commissioner Margrethe Vestager stated in a recent speech that “[w]hat businesses can—and must—do is to ensure antitrust compliance by design. That means pricing algorithms need to be built in a way that doesn’t allow them to collude.”[130] She later elaborated on her view at another conference: “[s]ome of these algorithms will have to go to law school before they are let out. You have to teach your algorithm what it can do and what it cannot do, because otherwise there is the risk that the algorithm will learn the tricks. . . . We don’t want the algorithms to learn the tricks of the old cartelists. . . . We want them to play by the book also when they start playing by themselves.”[131] Another senior EU official echoed the view that firms should program “software to avoid collusion in the first place”[132] and that “[r]espect for the rules must be part of the algorithm that a company configures and for whose behavior the company will be ultimately liable.”[133]

As desirable as antitrust compliance by design is, Simonetta Vezzoso pointed out that the implementation may not be straightforward: “[w]hile the idea of competition compliance by design might be gaining some foothold in the mind-sets of some competition authorities, there are currently no clear indications how it could be integrated into the already complex competition policy fabric.”[134] Indeed, what does it mean to “program compliance with the Sherman Act?” That is the question that Joseph Harrington asked in a recent paper. He concluded that all that the current jurisprudence tells us is to make sure algorithms do not “communicate with each other in the same sense that human managers are prohibited from communicating” under the Sherman Act.[135] But as both Vezzoso and Harrington suggested, there is more we could do.

In this section, I discuss several potential pathways to algorithmic compliance and argue that a robust compliance program should take a holistic and multi-faceted view. Specifically, I will look at a monitoring approach to compliance, then venture into the harder problem of designing compliant algorithms from the ground up. I will also discuss some existing proposals, draw additional lessons from the recent AI literature, and finally present potential technical frameworks, inspired by the current machine learning literature, for compliant algorithmic design.[136]

A. Algorithmic Compliance: A Monitoring Approach

The first approach is to use automated monitoring as a compliance tool. Despite not being the type of competition by design that would immediately come to mind, these algorithmic tools can be an important component of a compliance program. Instead of trying to dictate the design process, these tools monitor the behavior of humans as well as algorithms. The main advantage of this approach is that it does not attempt to open the black box of complicated computer programs; it focuses instead on the relevant firm behaviors that can be observed and interpreted.

Directly monitoring the “symptoms” of an antitrust violation is the most straightforward starting point. These “symptoms” are often referred to as plus factors or collusive markers. More formally, Kovacic, et al., define plus factors as “economic actions and outcomes, above and beyond parallel conduct by oligopolistic firms, that are largely inconsistent with unilateral conduct but largely consistent with explicitly coordinated action.” [137] They further define the super plus factors as the strongest of such factors. For example, unexplainable price increases or other types of abnormalities in prices have been recognized as such plus factors.[138] There is by now a robust “cartel screen” literature that studies empirical approaches and designs algorithms to detect such price anomalies.[139] And with adequate data and necessary analytical capabilities, empirical screening algorithms could be an important addition to an algorithmic compliance program.[140]

Michal Gal (2019) proposed several plus factors directly related to the use of pricing algorithms. For example, she argues that red flags should be raised if firms “consciously use similar algorithms even when better algorithms are available to them” or if “firms make conscious use of similar data on relevant market conditions even when better data sources exist,” among others.[141]

The second approach to algorithmic compliance has seen increasing adoption and success in the Regulatory Technology (RegTech) industry where AI technologies are being deployed to help companies meet their regulatory compliance needs. RegTech as an industry, often labelled as the new FinTech, or Financial Technology, has seen rapid growth in recent years.[142] Several RegTech companies currently offer AI-based compliance technologies based on natural language processing (NLP) and natural language understanding (NLU). According to one company, their NLP/NLU technology can “detect intentions, extract entities, and detect emotions” in human communications.[143] This type of technology could also be used to monitor communications among competitors to detect, and hence potentially deter, collusive behavior. Indeed, evidence of interfirm communications played a critical role in the investigation of a number of international cartel cases. With NLP and NLU AI technologies, machines can potentially flag problematic communications in real time in a cost-effective manner.

Despite the active and promising research in collusive markers and their uses for cartel detection and monitoring, developing these monitoring algorithms is by no means a trivial exercise. Collecting adequate and quality data is almost always the very first step. To the extent that a company or an antitrust agency wants to incorporate these tools in a compliance or a monitoring program, analytical capabilities may also be necessary.[144] Equally important to keep in mind is that findings of plus factors should typically lead to further investigation as there may be legitimate reasons for a specific conduct or market outcome.

B. Algorithmic Compliance: Compliance by Design

A much more challenging task is identifying specific algorithms or algorithmic features that should or should not be built in, a question that many may have in mind when thinking about compliance by design. But as discussed extensively above, existing AI research has given us many insights. Design features that have been exploited to achieve cooperation include the capability to communicate, use of a planning agent, modified objectives, and potentially other features guided by the answers to the questions posed by Leader– and Builder-type algorithms.

In the rest of this section, I discuss potential ways to implement compliance by design when we do not necessarily have knowledge about the problematic features beforehand or when it is difficult to isolate the properties that lead to supra-competitive pricing.

1. Looking Forward: A Research Proposal

Vezzoso highlighted the significant challenges in programming antitrust law directly into algorithms. She noted that “[p]rogrammers must articulate their objectives as ‘a list of instructions, written in one of a number of artificial languages intelligible to a computer’. . . . The flexibility of human interpretations, meaning the possibility that legal practitioners interpret norms and principles differently and that legal interpretation evolves over time, may conflict with the apparent stiffness of computer language. . . . The degree to which competition law is, or should be, suitable for automation is an interesting yet neglected topic.”[145] Indeed, given how concise the Sherman Act is, most legal scholars, if not all, would agree that turning the Sherman Act into a set of specific if-then type instructions is a tall order, if not outright impossible.

But here is what’s particularly interesting about how programmable antitrust laws are: The lack of (traditional) programmability is precisely the problem that modern machine learning and AI technologies are designed to circumvent. Consider the task of automatically recognizing and distinguishing cats and dogs in images. The traditional rule-based computer programming approach would be to enumerate all the physical differences between cats and dogs. But given how many subtle physical differences and similarities there are between cats and dogs, it gets difficult very quickly to improve classification accuracy. The standard (supervised) machine learning approach circumvents this problem by providing a large number of examples that consist of inputs (images) and associated outputs (the “label” describing whether the image is a cat or a dog) to a statistical model. A large number of such examples (i.e., training data) allows the model to search for the most predictive inputs, as well as the best way to map these inputs to the correct output, all without relying on rules that humans must painstakingly write down. This tells us that, perhaps, we also do not need to write down all the explicit instructions of antitrust compliance. Fortunately, economists and courts have identified a set of indicators predictive of collusive conduct. These are the plus factors I discussed above in the context of a monitoring approach to antitrust compliance. The question we address in this section is whether these predictors and the algorithms designed to monitor them can contribute directly to the design of antitrust-compliant algorithms, and if so, how.[146]